AI Chatbot Tester: Advanced Testing Platform for Conversational AI

Developed by the Beontrack Solutions team, the AI Chatbot Tester is a platform for automated, scenario-based testing of conversational AI.

Testing chatbots effectively has never been more crucial. With the rise of Large Language Models (LLMs) and sophisticated conversational AI, developers need robust testing tools that can handle complex scenarios, role-based interactions, and knowledge base validation. Our AI Chatbot Tester provides exactly that: a comprehensive testing platform designed for modern chatbot development.

Overview

The AI Chatbot Tester is a web-based platform that allows developers to test their chatbots with advanced configurations, including:

- LLM Provider Integration - Support for multiple providers like Ollama

- Role-Based Testing - Upload custom roles or define manual instructions

- Knowledge Base Validation - Test with uploaded knowledge bases

- Execution Tracking - Detailed logs and traces for debugging

- Flexible Configuration - Customizable parameters for various testing scenarios

Key Features

🔧 Configuration Management

The platform provides comprehensive configuration options:

Chat URL Setup

- Configure your chatbot's webhook endpoint

- Support for various chatbot platforms and frameworks

- Easy integration with existing chatbot infrastructure
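To make the webhook setup concrete, here is a minimal Python sketch of how a test harness might send one user turn to a chat URL. The JSON payload shape (`message`, `session_id`) and the helper names `build_webhook_request` and `send_message` are hypothetical; each chatbot platform defines its own request format, so adapt the body to yours.

```python
import json
import urllib.request


def build_webhook_request(chat_url, message, session_id, headers=None):
    """Build a POST request carrying one user turn.

    The payload shape {"message": ..., "session_id": ...} is a hypothetical
    example; rename the fields to match your chatbot platform.
    """
    body = json.dumps({"message": message, "session_id": session_id}).encode("utf-8")
    all_headers = {"Content-Type": "application/json"}
    all_headers.update(headers or {})  # e.g. {"Authorization": "Bearer ..."}
    return urllib.request.Request(chat_url, data=body, headers=all_headers, method="POST")


def send_message(chat_url, message, session_id, headers=None, timeout=30.0):
    """Send one turn and return the parsed JSON response from the bot."""
    req = build_webhook_request(chat_url, message, session_id, headers)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Keeping request construction separate from sending makes it easy to inspect or log the exact request without a live bot.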

LLM Provider Support

- Ollama Integration - Built-in support for local LLM deployment

- Model Selection - Choose from various models (e.g., gpt-oss-optimized:latest)

- Custom LLM URLs - Connect to your preferred LLM endpoints

- Turn Limitations - Set maximum conversation turns for controlled testing
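For the Ollama integration, a client talks to Ollama's HTTP API. The sketch below uses the documented `/api/chat` endpoint with streaming turned off, which returns the reply under `message.content`. How the tester itself calls Ollama isn't described here, so treat the helper names `build_ollama_chat_request` and `ollama_chat` as illustrative; `http://localhost:11434` is Ollama's default local address.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default local address


def build_ollama_chat_request(base_url, model, messages):
    """Build a non-streaming request for Ollama's /api/chat endpoint."""
    payload = {"model": model, "messages": messages, "stream": False}
    return urllib.request.Request(
        base_url.rstrip("/") + "/api/chat",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ollama_chat(base_url, model, messages, timeout=120.0):
    """Return the assistant's reply text from a running Ollama server."""
    req = build_ollama_chat_request(base_url, model, messages)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        data = json.loads(resp.read().decode("utf-8"))
    # Non-streaming responses carry the reply under message.content.
    return data["message"]["content"]
```

A call such as `ollama_chat(OLLAMA_URL, "gpt-oss-optimized:latest", [{"role": "user", "content": "Hello"}])` only works if that model is available on the local Ollama server.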

👥 Role Configuration

One of the most powerful features is role-based testing:

Customer Role Source Options:

- Upload File - Import role definitions from .txt, .md, or .json files

- Manual Input - Define custom roles directly in the interface

- AI-Generated Roles - Automatically generate customer personas with AI

Additional Role Instructions:

- Add behavioral modifications (e.g., "provide incorrect phone number initially")

- Define personality traits ("be impatient and ask many questions")

- Set interaction styles ("be very polite and formal")

- Create complex testing scenarios
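Role files and extra instructions ultimately have to become a prompt for the LLM that plays the customer. One plausible way to combine them is sketched below; the prompt wording, the `[END]` stop marker, and the function name `build_persona_prompt` are assumptions, not the platform's actual template.

```python
def build_persona_prompt(role_description, extra_instructions=()):
    """Combine a customer role and extra behaviour rules into one system prompt.

    The wording and the [END] stop marker are hypothetical conventions for
    this sketch, not the tester's actual prompt.
    """
    lines = [
        "You are role-playing a customer talking to a support chatbot.",
        "Stay in character and write only the customer's next message.",
        "",
        "Role:",
        role_description.strip(),
    ]
    if extra_instructions:
        lines += ["", "Additional instructions:"]
        lines += [f"- {rule}" for rule in extra_instructions]
    lines += ["", "When your goal is reached, reply with [END]."]
    return "\n".join(lines)
```

Putting the behavioural rules in their own section keeps them easy to swap per test run while the base role stays fixed.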

📚 Knowledge Base Integration

Test your chatbot's knowledge retrieval capabilities:

Knowledge Base Source:

- File Upload - Support for .txt, .md, and .json formats

- Manual Input - Direct text input for quick testing

- Fact-Checking Mode - Enable knowledge base validation during conversations

Fact-Checking Bot Responses:

- Verify chatbot answers against the uploaded knowledge base

- Ensure accuracy and consistency in responses

- Identify knowledge gaps and incorrect information
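The description doesn't say how fact-checking is implemented. As a cheap, deterministic complement to any LLM-based check, a tester can flag figures (phone numbers, prices, opening times) that appear in a bot reply but nowhere in the knowledge base. The heuristic and the name `unsupported_numbers` are hypothetical and deliberately narrow: it catches invented or altered numbers, not wrong claims in general.

```python
import re

# Digit runs, optionally joined by "-", "." or ":" (phone numbers, prices, times).
# The pattern must start and end on a digit, so sentence punctuation is excluded.
_NUMBER = re.compile(r"\d[\d\-.:]*\d|\d")


def unsupported_numbers(bot_reply, knowledge_base):
    """Return numbers in the reply that never appear in the knowledge base.

    A crude heuristic: it only catches invented or altered figures,
    not incorrect statements in general.
    """
    known = set(_NUMBER.findall(knowledge_base))
    flagged = []
    for token in _NUMBER.findall(bot_reply):
        if token not in known and token not in flagged:
            flagged.append(token)
    return flagged
```

This pairs naturally with the "provide incorrect phone number initially" style of role instruction: if the bot echoes a number the knowledge base doesn't contain, it shows up here.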

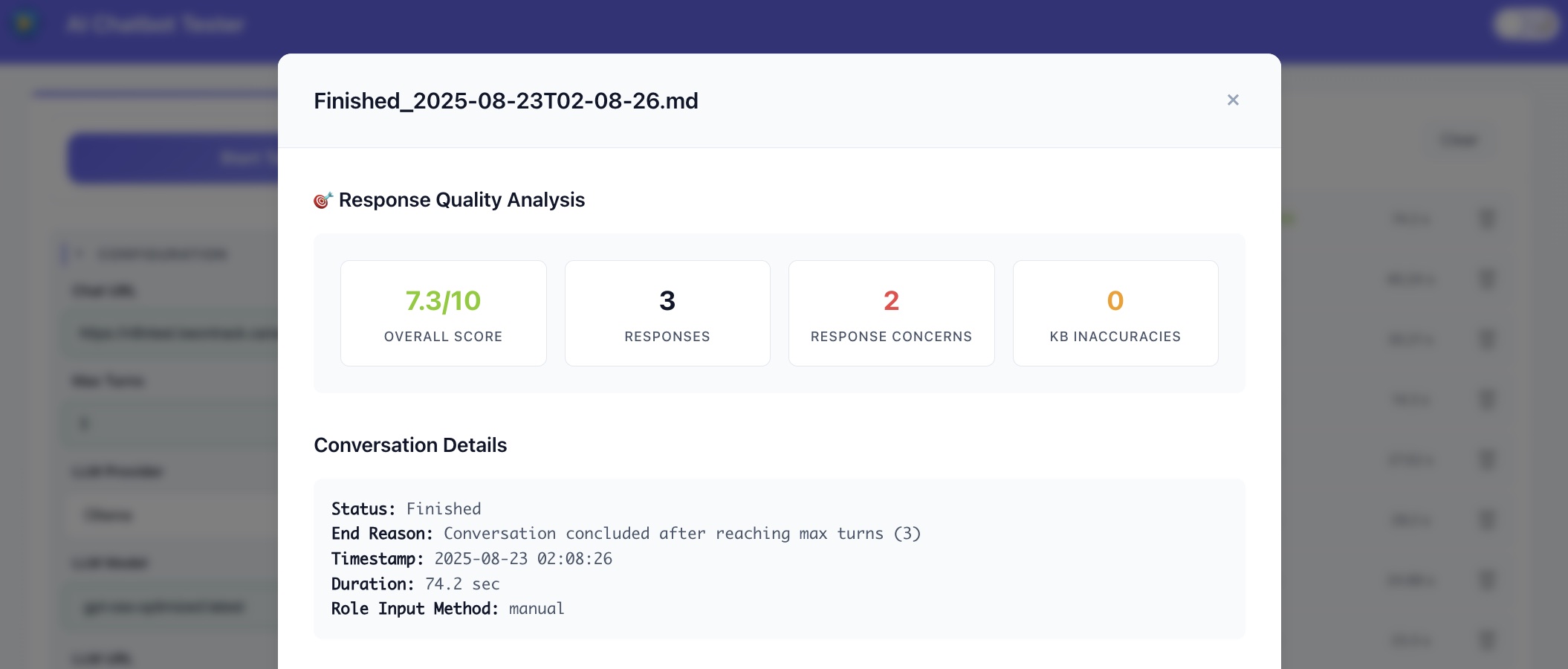

📊 Execution Monitoring

Comprehensive testing requires detailed monitoring:

Execution Log:

- Real-time conversation tracking

- Response time monitoring

- Error detection and reporting

- Turn-by-turn analysis

Traces:

- Detailed execution traces for debugging

- LLM interaction logs

- Knowledge base query results

- Role adherence tracking
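A per-turn record with timings is the core of this kind of monitoring. Here is a minimal sketch, assuming the bot is wrapped in a plain `send(message)` callable; the `TurnRecord` and `ExecutionLog` names and fields are illustrative, not the tester's actual log format. Errors are recorded rather than raised so one failed turn does not abort the run.

```python
import time
from dataclasses import dataclass, field


@dataclass
class TurnRecord:
    turn: int
    user_message: str
    bot_reply: str
    latency_s: float
    error: str = ""


@dataclass
class ExecutionLog:
    records: list = field(default_factory=list)

    def run_turn(self, send, user_message):
        """Call send(user_message), timing it and recording errors instead of raising."""
        start = time.perf_counter()
        try:
            reply, error = send(user_message), ""
        except Exception as exc:  # log the failure and keep the test run going
            reply, error = "", f"{type(exc).__name__}: {exc}"
        latency = time.perf_counter() - start
        self.records.append(TurnRecord(len(self.records) + 1, user_message, reply, latency, error))
        return reply

    def summary(self):
        latencies = [r.latency_s for r in self.records]
        return {
            "turns": len(self.records),
            "errors": sum(1 for r in self.records if r.error),
            "max_latency_s": max(latencies, default=0.0),
        }
```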

How It Works

1. Configuration Setup

Start by configuring your testing environment:

- Enter your chatbot's webhook URL

- Select LLM provider and model

- Set maximum turns for conversation limits

- Configure LLM endpoint URL
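The settings in this step map naturally onto a small configuration object that is validated before a run starts. The class name `TesterConfig`, its field names, and the defaults are assumptions, apart from `http://localhost:11434`, which is Ollama's default local address.

```python
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass
class TesterConfig:
    chat_url: str                            # chatbot webhook endpoint
    model: str                               # e.g. "gpt-oss-optimized:latest"
    llm_url: str = "http://localhost:11434"  # Ollama's default address
    provider: str = "ollama"
    max_turns: int = 10                      # hypothetical default turn limit

    def __post_init__(self):
        # Reject obviously broken URLs before any request is sent.
        for name in ("chat_url", "llm_url"):
            value = getattr(self, name)
            parsed = urlparse(value)
            if parsed.scheme not in ("http", "https") or not parsed.netloc:
                raise ValueError(f"{name} must be an http(s) URL, got {value!r}")
        if self.max_turns < 1:
            raise ValueError("max_turns must be at least 1")
```

Failing fast on configuration keeps a typo in the webhook URL from showing up later as a confusing run of timeouts.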

2. Role Definition

Define the testing persona:

- Upload a role file or input manually

- Add specific behavioral instructions

- Enable AI-generated roles if needed

- Customize interaction patterns

3. Knowledge Base (Optional)

Enhance testing with knowledge validation:

- Upload knowledge base files

- Enable fact-checking mode

- Define accuracy requirements

- Set knowledge validation rules

4. Test Execution

Run comprehensive tests:

- Click "Start Test" to begin

- Monitor real-time execution logs

- Review detailed traces

- Analyze conversation flow
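Conceptually, a test run alternates between the simulated customer and the chatbot until the turn limit is hit or the persona signals it is done. The sketch below assumes both sides are plain callables (in practice an LLM call for the persona and a webhook call for the bot); the function name `run_conversation` and the `[END]` marker are hypothetical.

```python
def run_conversation(persona_reply, bot_reply, opening, max_turns=10, stop_marker="[END]"):
    """Alternate between a simulated customer and the chatbot under test.

    persona_reply(transcript) -> next customer message (e.g. from an LLM)
    bot_reply(message)        -> chatbot answer (e.g. via its webhook)
    Stops after max_turns customer messages or when the persona emits stop_marker.
    """
    transcript = []
    message = opening
    for _ in range(max_turns):
        if stop_marker in message:
            break
        transcript.append(("customer", message))
        transcript.append(("bot", bot_reply(message)))
        message = persona_reply(transcript)
    return transcript
```

Passing both sides in as functions keeps the loop itself testable with scripted stubs, independent of any LLM or live bot.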

Use Cases

Customer Service Testing

- Test support chatbots with various customer personas

- Validate knowledge base accuracy for FAQ responses

- Ensure proper escalation procedures

- Test handling of complex customer scenarios

E-commerce Chatbots

- Simulate different buyer personas

- Test product recommendation accuracy

- Validate inventory and pricing information

- Ensure smooth checkout processes

Healthcare AI Assistants

- Test with patient personas and scenarios

- Validate medical knowledge accuracy

- Ensure compliance with healthcare regulations

- Test appointment scheduling and information retrieval

Educational Chatbots

- Test tutoring and learning assistance bots

- Validate educational content accuracy

- Test adaptive learning scenarios

- Ensure age-appropriate responses

Technical Specifications

Supported File Formats

- Text Files (.txt) - Plain text role definitions and knowledge bases

- Markdown Files (.md) - Structured content with formatting

- JSON Files (.json) - Structured data for complex configurations
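Since roles and knowledge bases share the same three formats, one loader can serve both. This sketch reads .txt and .md verbatim and parses .json (so malformed files fail early) before re-serializing it as indented text for the prompt; the function name `load_text_source` and that normalization step are assumptions.

```python
import json
from pathlib import Path

SUPPORTED = {".txt", ".md", ".json"}


def load_text_source(path):
    """Load a role or knowledge-base file as prompt-ready text.

    .txt and .md are returned as-is; .json is parsed (raising on malformed
    input) and re-serialized with stable indentation.
    """
    p = Path(path)
    suffix = p.suffix.lower()
    if suffix not in SUPPORTED:
        raise ValueError(f"unsupported file type {suffix!r}; expected one of {sorted(SUPPORTED)}")
    raw = p.read_text(encoding="utf-8")
    if suffix == ".json":
        return json.dumps(json.loads(raw), indent=2, ensure_ascii=False)
    return raw
```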

LLM Integration

- Ollama Support - Local LLM deployment and testing

- Custom Endpoints - Flexible integration with various LLM providers

- Model Selection - Support for different model variants and configurations

Webhook Integration

- RESTful API Support - Standard HTTP webhook integration

- Custom Headers - Support for authentication and custom parameters

- Response Parsing - Intelligent parsing of chatbot responses
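Response formats vary between chatbot frameworks, so parsing usually means probing a few likely fields. The heuristic below is a hypothetical stand-in for the platform's response parsing: the key names it tries are assumptions to adapt to your bot, and lists of message objects are joined into one reply.

```python
def extract_reply(payload):
    """Pull the reply text out of a chatbot webhook response.

    Tries a few common shapes in order; the key names are assumptions
    and should be adapted to the platform under test.
    """
    if isinstance(payload, str):
        return payload
    if isinstance(payload, list):  # e.g. a list of message objects
        return "\n".join(filter(None, (extract_reply(item) for item in payload)))
    if isinstance(payload, dict):
        for key in ("reply", "response", "output", "text", "message", "content"):
            if key in payload:
                text = extract_reply(payload[key])  # recurse into nested objects
                if text:
                    return text
    return ""  # unknown shape: treat as an empty reply
```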

Advanced Testing Scenarios

Multi-Turn Conversations

Test complex conversation flows with:

- Context maintenance across turns

- Information persistence

- Conversation state management

- Natural conversation progression

Edge Case Testing

Identify and test edge cases:

- Unexpected user inputs

- System error scenarios

- Timeout handling

- Fallback responses
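Timeout handling can be exercised from the harness side by retrying transient network failures with exponential backoff and recording a fallback value when the bot never answers. A minimal sketch; the retry count, backoff and the name `call_with_retries` are illustrative choices.

```python
import time


def call_with_retries(send, message, retries=2, backoff_s=1.0, fallback=None):
    """Call send(message), retrying on network errors with exponential backoff.

    Returns (reply, attempts). If every attempt fails, returns
    (fallback, attempts) so the run can log the failure instead of crashing.
    """
    for attempt in range(retries + 1):
        try:
            return send(message), attempt + 1
        except OSError:
            # TimeoutError, urllib.error.URLError and connection resets are all
            # OSError subclasses, so one clause covers the usual network failures.
            if attempt < retries:
                time.sleep(backoff_s * 2 ** attempt)  # 1s, 2s, 4s, ... by default
    return fallback, retries + 1
```

Note that HTTP error responses raised by `urllib` are also `URLError` subclasses, so they are retried too; narrow the `except` clause if that is not what a given test wants.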

Performance Testing

Evaluate chatbot performance:

- Response time analysis

- Concurrent user simulation

- Load testing capabilities

- Resource usage monitoring
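For response-time analysis under concurrency, a simple approach is to run several simulated users in threads, each sending its messages in order, and report latency percentiles. This is a sketch under the assumption that `send` is a blocking, thread-safe function that delivers one message; the function names are hypothetical, it uses nearest-rank percentiles, and it is no substitute for a dedicated load-testing tool.

```python
import math
import time
from concurrent.futures import ThreadPoolExecutor


def percentile(values, q):
    """Nearest-rank percentile for q in (0, 100]."""
    ordered = sorted(values)
    rank = max(1, math.ceil(q / 100 * len(ordered)))
    return ordered[rank - 1]


def simulate_user(send, messages):
    """One simulated user sending its messages in order; returns latencies in seconds."""
    latencies = []
    for message in messages:
        start = time.perf_counter()
        send(message)
        latencies.append(time.perf_counter() - start)
    return latencies


def load_test(send, messages, users=5):
    """Run `users` simulated users in parallel threads and summarize latency."""
    with ThreadPoolExecutor(max_workers=users) as pool:
        per_user = list(pool.map(lambda _: simulate_user(send, messages), range(users)))
    latencies = [t for user in per_user for t in user]
    return {
        "requests": len(latencies),
        "p50_s": percentile(latencies, 50),
        "p95_s": percentile(latencies, 95),
        "max_s": max(latencies),
    }
```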

Benefits for Development Teams

Faster Development Cycles

- Automated testing reduces manual effort

- Quick iteration and feedback loops

- Early detection of issues

- Streamlined debugging process

Quality Assurance

- Comprehensive test coverage

- Consistent testing procedures

- Objective performance metrics

- Detailed reporting and analysis

Team Collaboration

- Shared testing configurations

- Standardized testing procedures

- Clear documentation and logs

- Easy knowledge sharing

Getting Started

Ready to test your chatbot? Here's how to begin:

- Access the Platform - Navigate to the AI Chatbot Tester interface

- Configure Settings - Set up your chatbot URL and LLM preferences

- Define Roles - Create or upload testing personas

- Add Knowledge - Upload relevant knowledge bases if needed

- Start Testing - Run your first test and analyze results

Best Practices

Test Design

- Create diverse and realistic personas

- Include edge cases and error scenarios

- Test both positive and negative paths

- Validate against real user data

Knowledge Management

- Keep knowledge bases updated

- Test with both correct and outdated information

- Validate fact-checking accuracy

- Monitor knowledge gaps

Result Analysis

- Review execution logs regularly

- Analyze conversation patterns

- Identify improvement opportunities

- Track performance metrics over time

Conclusion

The AI Chatbot Tester brings LLM integration, role-based testing, knowledge base validation, and execution monitoring together in one platform, giving developers the tools they need to build robust and reliable chatbots.

Whether you're developing customer service bots, virtual assistants, or specialized AI applications, this testing platform helps you verify that your chatbot delivers accurate, reliable answers and a good user experience.

Start testing your chatbot today and discover how comprehensive testing can transform your conversational AI development process.

Ready to elevate your chatbot testing? Contact our team to learn more about implementing advanced testing strategies for your conversational AI projects.